Zero-shot Text-to-SQL Learning with Auxiliary Task

Jan 10, 2021 DL sql nlp lstm

Summary

With the recent success of neural seq2seq models for text-to-SQL translation, questions have been raised about how well these models generalize to unseen data.

The authors diagnose the bottleneck and propose a new testbed. Additionally, the authors design a simple but effective auxiliary task, which serves as a supportive model as well as a regularization term for the generation task, to improve the model's generalization.
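To make the "regularization term" idea concrete, here is a minimal sketch of a joint objective in which an auxiliary mapping loss is added to the generation loss. The function names, the `aux_weight` parameter, and the use of plain cross-entropy are assumptions made for illustration; this is not the authors' implementation, and the paper should be consulted for the exact objective.

```python
import math


def cross_entropy(probs, target_index):
    """Negative log-likelihood of the target class under a probability vector."""
    return -math.log(probs[target_index])


def joint_loss(gen_step_probs, gen_targets, map_word_probs, map_targets, aux_weight=1.0):
    """Generation loss plus a weighted auxiliary mapping loss (hypothetical form).

    gen_step_probs: one probability vector per SQL decoding step.
    gen_targets:    index of the correct token at each decoding step.
    map_word_probs: one probability vector over columns per question word.
    map_targets:    index of the correct column per question word, or None
                    when the word is not part of a condition value.
    aux_weight:     assumed scalar that balances the two terms.
    """
    gen_loss = sum(cross_entropy(p, t) for p, t in zip(gen_step_probs, gen_targets))
    # Only question words that have a mapping target contribute to the auxiliary term.
    aux_loss = sum(
        cross_entropy(p, t)
        for p, t in zip(map_word_probs, map_targets)
        if t is not None
    )
    return gen_loss + aux_weight * aux_loss
```

Because both terms are minimized together, the encoder is pushed toward representations that also support the word-to-column mapping, which is one way an auxiliary task can act as a regularizer.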

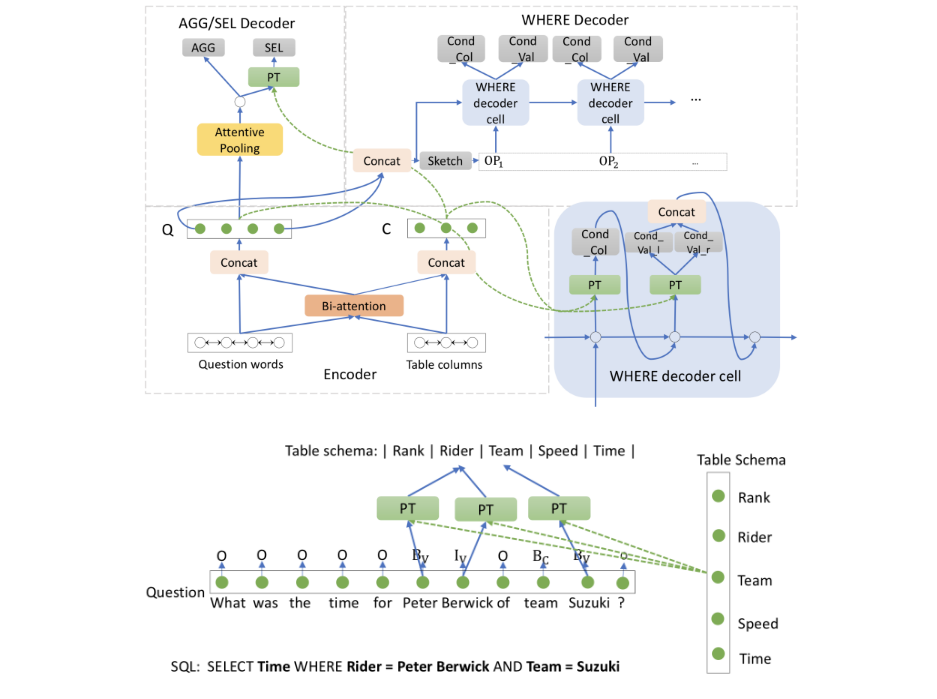

Figure 3: Illustration of our model. The upper figure is the text-to-SQL generation model, which consists of three parts: encoder (lower left), AGG/SEL decoder (upper left), and WHERE decoder (upper right). Lower right is the WHERE decoder cell. The bottom figure is our auxiliary mapping model with the ground-truth label of an example. A question word is mapped to a column only when it is tagged as part of a condition value (Bv or Iv).
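The labeling rule in the caption (a question word is mapped to a column only when it is tagged as part of a condition value) can be sketched as follows. This is an illustrative reconstruction, not the authors' code: the function name, the exact-match span search, the `"O"` tag for untagged words, the `"Bv"`/`"Iv"` tag spellings, and the example question and column are all assumptions.

```python
def tag_condition_values(question_tokens, conditions):
    """Build per-word tags and column labels for the auxiliary mapping task.

    question_tokens: list of question words.
    conditions:      list of (column_name, value_tokens) pairs taken from the
                     WHERE clause of the ground-truth SQL query.
    Returns (tags, columns): tags[i] is "Bv" (begins a value), "Iv" (inside a
    value), or "O" (outside); columns[i] is the mapped column name or None.
    """
    tags = ["O"] * len(question_tokens)
    columns = [None] * len(question_tokens)
    lowered = [tok.lower() for tok in question_tokens]

    for column, value_tokens in conditions:
        value = [tok.lower() for tok in value_tokens]
        n = len(value)
        if n == 0:
            continue
        # Find the first exact occurrence of the condition value in the question.
        for start in range(len(lowered) - n + 1):
            if lowered[start:start + n] == value:
                tags[start] = "Bv"
                for i in range(start + 1, start + n):
                    tags[i] = "Iv"
                for i in range(start, start + n):
                    columns[i] = column
                break
    return tags, columns


# Made-up example: only the words of the condition value receive a column label.
example_question = "what position does the player from south carolina play".split()
example_tags, example_columns = tag_condition_values(
    example_question, [("college", ["south", "carolina"])]
)
```

Words outside any condition value keep the `"O"` tag and a `None` column, so they would contribute nothing to a mapping loss computed over these labels.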

Metadata:

| Field | Value |
| --- | --- |
| Authors | Shuaichen Chang, Pengfei Liu, Yun Tang, Jing Huang, Xiaodong He, Bowen Zhou |
| Released | Sep 29, 2019 |
| Source | Link |