RECENTLY READ

UPCOMING READING

Batch Normalization Accelerating Deep Network Training by Reducing Internal Covariate Shift

Jan 10, 2021 NN layers learning rate

Table of Contents

- Summary

- Differences to input Normalization

- Batch Normalization for CNN and other networks

- Accelerating a BN Network

Summary

Neural Network are generally hard to train. Changing the parameters of one layer changes the input distribution for the following layer. This change in distribution is called a covariant shift.

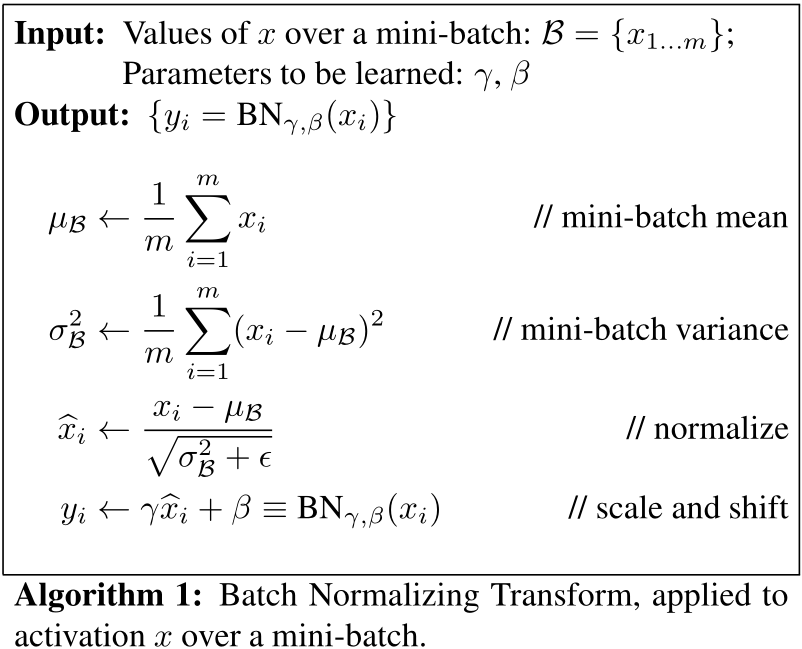

To reduce this needed adaptation of the layer to the covariant shift, the authors present “Batch Normalization” (BN). This method normalizes the data for each mini-batch, between the layers. This allows to use a higher learning rate, be less careful about the initialization and can act as regularizer.

Differences to input Normalization

Normalizing each input of a layer can change/reduce the capabilities what the layer can represent. “Batch Normalization” addresses this by making sure that the transformation inserted in the network can represent the identity transform.

BN transform is a differentiable transformation that introduces normalized activations into the network. This ensures that as the model is training, layers can continue learning on input distributions that exhibit less internal co- variate shift, thus accelerating the training. Furthermore, the learned affine transform applied to these normalized activations allows the BN transform to represent the iden- tity transformation and preserves the network capacity.

Batch Normalization for CNN and other networks

Generally BN can be applied to any set of activations in a network.

Accelerating a BN Network

Just adding BN is not a magical tool that will increase the performance of a network. The authors recommend additional changes to use the full advantage of BN:

- Increase learning rate

- Remove Dropout

- Reduce the L2 weight regularization

- Accelerate the learning rate dacay

- Remove Local Response Normalization

- Shuffle training examples more thoroghly

- reduce the photometric distortions

But adding BN just to a state-of-the art network, can yield a speedup in the training.

Metadata:

| Author: | Sergey Ioffe, Christian Szegedy |

| Released: | Feb 1, 2015 |

| Source: | Link |